Lab 05: Logistic Regression + KNN Classification

Introduction

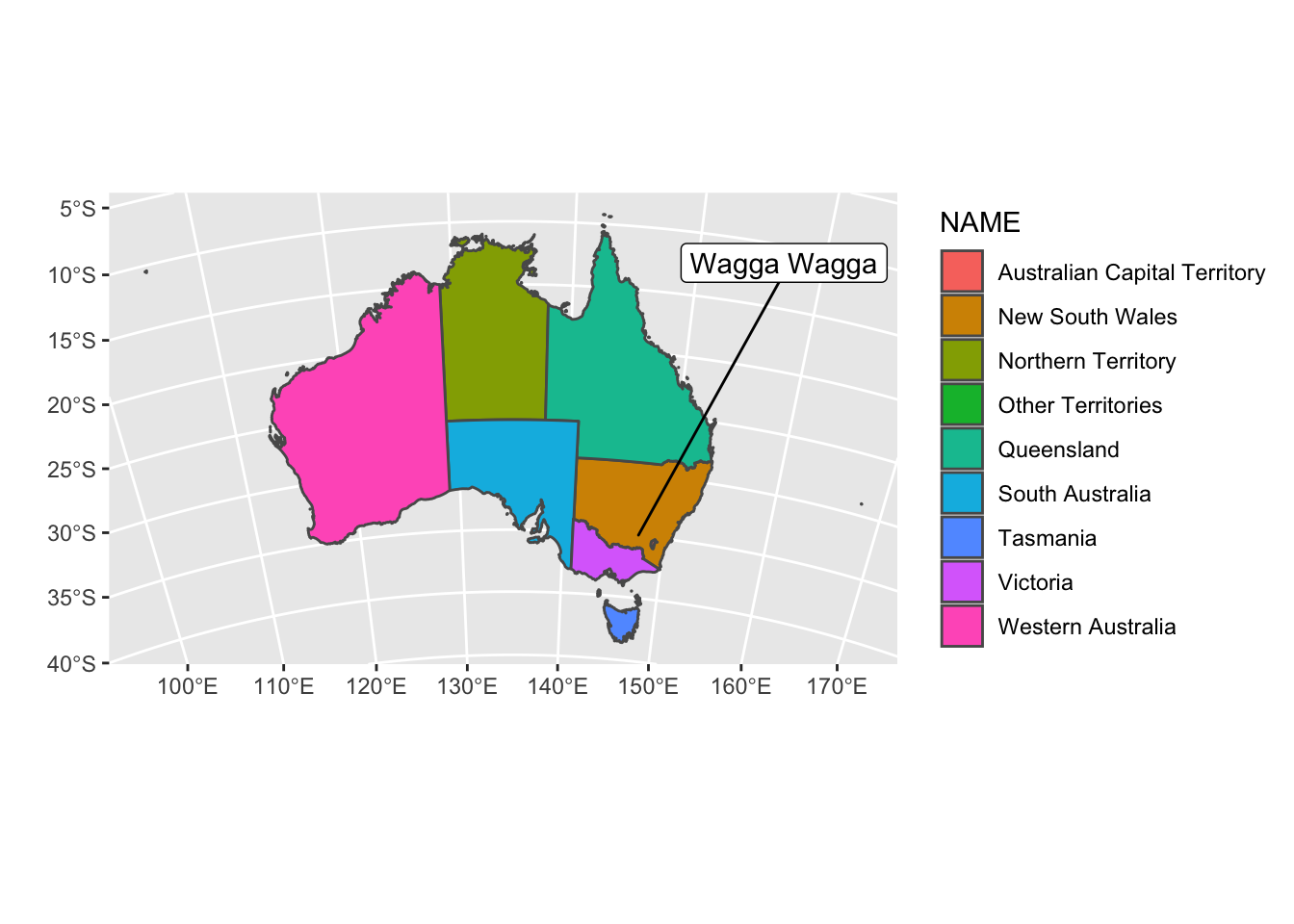

We will try to predict whether it rained the next day in Wagga Wagga, Australia, given certain environmental and climate conditions on the previous day. Our data, obtained from Kaggle, consist of measurements of these variables on Fridays from 2009 to 2016.

The variables are as follows:

- Evaporation: The so-called Class A pan evaporation (mm) in the 24 hours to 9am
- Sunshine: The number of hours of bright sunshine in the day
- WindSpeed3pm: Wind speed (km/hr) averaged over 10 minutes prior to 3pm
- Humidity3pm: Humidity (percent) at 3pm
- Pressure3pm: Atmospheric pressure (hPa) reduced to mean sea level at 3pm
- Cloud3pm: Fraction of sky obscured by cloud (in “oktas”: eighths) at 3pm
- Temp3pm: Temperature (degrees C) at 3pm
- RainTomorrow: “yes” or “no” for whether it rained the next day

Data

First, load in your data located in the weatherWaggaWagga.csv file. We will consider RainTomorrow = "yes" to be the success class.

Pick one quantitative variable and create side-by-side boxplots that display the distribution of that variable for each level of RainTomorrow. Also display a table of the total number of successes and failures in the data. Interpret your plot, and comment on the table.
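As a rough illustration of the plot and table requested above, here is a minimal sketch in base R. The data frame `weather` and its values are made up for this example; in the lab you would read the real file instead, and you may pick a different variable than `Humidity3pm`.

```r
# Toy stand-in for the lab data; these values are invented for illustration.
# In the lab, something like weather <- read.csv("weatherWaggaWagga.csv")
# would replace this block.
weather <- data.frame(
  Humidity3pm  = c(35, 42, 80, 28, 75, 50, 90, 33),
  RainTomorrow = c("no", "no", "yes", "no", "yes", "no", "yes", "no")
)

# Side-by-side boxplots: one box per level of RainTomorrow.
boxplot(Humidity3pm ~ RainTomorrow, data = weather,
        xlab = "RainTomorrow", ylab = "Humidity3pm (percent)")

# Number of failures ("no") and successes ("yes").
class_counts <- table(weather$RainTomorrow)
class_counts
```

A table like `class_counts` is worth keeping in mind later: when one class is much rarer than the other, the error rates for the two classes can look very different.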

Part 1: validation set approach

We will compare the performance of logistic regression with that of KNN classification using a validation set approach.

Test/train split

First split your data into an 80% train and 20% test set using a seed of 41.
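One possible way to make such a split is sketched below on a made-up data frame `dat`. The object names are hypothetical, and rounding the training size down with `floor()` is an assumption; the lab does not say how to round.

```r
# Hypothetical data frame with 50 rows, used only to illustrate the split.
n <- 50
dat <- data.frame(id = seq_len(n), x = seq_len(n)^2)

set.seed(41)  # seed requested in the lab, set right before the random draw
train_ids <- sample(seq_len(n), size = floor(0.8 * n))

train <- dat[train_ids, ]   # 80% of the rows
test  <- dat[-train_ids, ]  # the remaining 20%
```

Setting the seed immediately before `sample()` keeps the split reproducible even if earlier chunks change.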

Logistic regression

Fit the model

Fit a logistic regression to the training data for RainTomorrow using all the remaining variables in the dataset. Display a summary of the model, and interpret the coefficient for the variable you chose to visualize in the EDA section. How, if at all, is the probability of it raining tomorrow in Wagga Wagga associated with that predictor?
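The sketch below shows the general shape of such a fit on simulated data. The data, the true coefficients, and the single predictor are all assumptions made for this example. Because `RainTomorrow` is stored as a factor with levels `c("no", "yes")`, `glm()` treats the second level, "yes", as the success class.

```r
# Simulated training data; the numbers are invented for illustration.
set.seed(1)
n <- 200
humidity <- runif(n, 10, 100)
p_true <- plogis(-4 + 0.06 * humidity)  # assumed true rain probabilities
train <- data.frame(
  Humidity3pm  = humidity,
  RainTomorrow = factor(ifelse(rbinom(n, 1, p_true) == 1, "yes", "no"),
                        levels = c("no", "yes"))
)

# Logistic regression of RainTomorrow on all remaining variables.
fit <- glm(RainTomorrow ~ ., data = train, family = binomial)
summary(fit)

# exp(coefficient) is the multiplicative change in the odds of rain
# for a one-unit increase in the predictor, holding the others fixed.
odds_ratio <- exp(coef(fit)[["Humidity3pm"]])
odds_ratio
```

A positive coefficient corresponds to an odds ratio above 1, meaning higher values of the predictor are associated with a higher estimated probability of rain.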

Hypothesis tests of the form \(H_{0}: \ \beta_{j} =0\) vs \(H_{a}: \ \beta_{j} \neq 0\) can be formulated for the coefficients in logistic regression just as they are in linear regression!
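For reference, the test reported for each coefficient in the `summary()` output of a `glm()` fit is a Wald test, based on the statistic

\[ z_{j} = \frac{\hat{\beta}_{j}}{\widehat{\mathrm{SE}}(\hat{\beta}_{j})} \]

which is approximately standard normal under \(H_{0}\) in large samples. This is the `z value` column of the summary table, and the accompanying p-value is two-sided.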

Predict

Now, obtain predicted labels for the test data using a threshold probability of 0.5. Importantly, because the data are in "yes"/"no" and not 1/0, your predictions should also be in terms of "yes"/"no".
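Converting probabilities to labels can be sketched as follows. The vector `probs` is made up here; in the lab it would come from something like `predict(fit, newdata = test, type = "response")`. Treating a probability of exactly 0.5 as "yes" is an assumption.

```r
# Hypothetical predicted probabilities of rain for five test days.
probs <- c(0.10, 0.50, 0.73, 0.49, 0.92)

# Label a day "yes" when its probability reaches the threshold.
threshold <- 0.5
pred_label <- ifelse(probs >= threshold, "yes", "no")
pred_label
```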

Then create a confusion matrix for the test set. Using code that is as reproducible as possible, obtain and report the misclassification rate, false negative rate, and false positive rate for the data. This can be achieved using either tidyverse or base R.
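A minimal base R sketch of these three rates is given below. The helper `error_rates()` and the toy label vectors are hypothetical. The rates are computed from the labels directly rather than by indexing into the confusion matrix, so the code does not depend on the order of the table's rows and columns.

```r
# Hypothetical helper: misclassification rate, FNR and FPR from label vectors.
error_rates <- function(truth, pred, positive = "yes") {
  fn <- sum(truth == positive & pred != positive)  # missed successes
  fp <- sum(truth != positive & pred == positive)  # false alarms
  c(misclass = mean(truth != pred),
    fnr      = fn / sum(truth == positive),
    fpr      = fp / sum(truth != positive))
}

# Toy labels, invented for illustration.
truth <- c("yes", "yes", "no", "no",  "no", "yes", "no", "no")
pred  <- c("yes", "no",  "no", "yes", "no", "no",  "no", "no")

table(predicted = pred, actual = truth)  # confusion matrix
rates <- error_rates(truth, pred)
rates
```

Here the FNR divides by the number of true "yes" days and the FPR by the number of true "no" days, so the two rates have different denominators.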

Comment on which is larger: your FPR or your FNR. Do these values make sense to you given the data?

KNN Classification

Now we will fit a KNN model to predict RainTomorrow using K = 20 neighbors. Set a seed of 41 in case there are ties. As our predictors are on completely different scales, first properly standardize your train and test data sets before fitting the model. You may either use your own implementation of KNN classification, or you may use the knn() function from the class library. Paste any functions you may need in the chunk labeled functions at the top of the document.
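The standardization step can be sketched as follows, using `knn()` from the `class` package on simulated data. The data and object names are assumptions made for this example. The key point is that the test set is centered and scaled with the training set's means and standard deviations, not its own.

```r
library(class)  # provides knn()

# Simulated predictors and labels; the values are invented for illustration.
set.seed(41)
n <- 100
x <- data.frame(Humidity3pm = runif(n, 10, 100),
                Pressure3pm = rnorm(n, 1015, 7))
y <- factor(ifelse(x$Humidity3pm > 60, "yes", "no"), levels = c("no", "yes"))

train_ids <- sample(n, 80)
train_x <- x[train_ids, ]
test_x  <- x[-train_ids, ]

# Means and standard deviations come from the training data only.
mu    <- sapply(train_x, mean)
sigma <- sapply(train_x, sd)
train_z <- scale(train_x, center = mu, scale = sigma)
test_z  <- scale(test_x,  center = mu, scale = sigma)

set.seed(41)  # knn() breaks ties at random
knn_pred <- knn(train = train_z, test = test_z, cl = y[train_ids], k = 20)
table(predicted = knn_pred, actual = y[-train_ids])
```

Reusing the training statistics mimics what happens with genuinely new data, where the test observations are not available when the scaling is chosen.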

Under this model, create a confusion matrix for the test set. Using reproducible code, obtain and report the misclassification rate, false negative rate, and false positive rate for the data.

How do your rates and the predicted labels themselves compare to those obtained under the logistic regression model?

Part 2: stratified k-fold CV

At this point, we know that k-fold CV is a better approach to estimating the test error compared to a validation set approach. For this lab, we will implement stratified k-fold CV. In stratified k-fold CV, the proportions of each label in each fold should be representative of the proportion of each label in the entire data set.

Create folds

Here, create your list of fold indices for stratified k-fold CV using 10 folds of roughly equal size. Set a seed of 41 again. “Roughly equal” means that either all 10 folds are the same size, or 9 folds are the same size and the tenth fold has slightly fewer observations.

Your code should be as reproducible as possible! That means it should not be tied to this particular data set or number of folds. This is the most difficult part of the lab, so think carefully about what you want to do! It could be helpful to write down your thoughts.
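To illustrate the idea of stratification, here is one possible sketch; it is not the only approach, and the function name `make_stratified_folds()` is hypothetical. It shuffles the rows, groups them by class, and then deals them out to the folds in turn. Note the simplification: fold sizes differ by at most one, which is close to but not exactly the "9 equal folds plus one smaller fold" layout described above.

```r
# Hypothetical helper: returns a list of row indices, one element per fold.
make_stratified_folds <- function(labels, n_folds) {
  shuffled <- sample(length(labels))              # random order of all rows
  # Group rows by class; order() keeps ties in their current (random) order.
  by_class <- shuffled[order(labels[shuffled])]
  # Deal the grouped rows out to folds 1, 2, ..., n_folds, 1, 2, ...
  fold_id <- rep_len(seq_len(n_folds), length(labels))
  split(by_class, fold_id)
}

# Toy labels: 83 "no" days and 20 "yes" days (made-up counts).
labels <- rep(c("no", "yes"), times = c(83, 20))
set.seed(41)
folds <- make_stratified_folds(labels, n_folds = 10)

sapply(folds, length)                                 # fold sizes
sapply(folds, function(f) mean(labels[f] == "yes"))   # share of "yes" per fold
```

Because each class occupies a contiguous run before the rows are dealt out, every fold receives nearly the same number of observations from each class.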

Logistic regression

Now fit the logistic regression model again, this time using the stratified 10-fold CV approach to estimate the test error.

Report the estimated test misclassification, false negative, and false positive rates under this model.
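The overall loop might look like the sketch below, run on simulated data. Everything here is an assumption made for illustration: the data, the compact way the stratified fold ids are built, and the choice to pool the held-out predictions from all folds before computing the rates (averaging per-fold rates is another option).

```r
# Simulated data; the values are invented for illustration.
set.seed(41)
n <- 300
dat <- data.frame(Humidity3pm = runif(n, 10, 100))
dat$RainTomorrow <- factor(
  ifelse(rbinom(n, 1, plogis(-5 + 0.06 * dat$Humidity3pm)) == 1, "yes", "no"),
  levels = c("no", "yes"))

# Stratified fold ids: sort rows by class (random within class), then
# deal them out to the folds in turn.
n_folds <- 10
ord <- order(dat$RainTomorrow, runif(n))
fold_id <- integer(n)
fold_id[ord] <- rep_len(seq_len(n_folds), n)

# Fit on 9 folds, predict the held-out fold, and store the labels.
cv_pred <- character(n)
for (f in seq_len(n_folds)) {
  held_out <- fold_id == f
  fit <- glm(RainTomorrow ~ ., data = dat[!held_out, ], family = binomial)
  p <- predict(fit, newdata = dat[held_out, ], type = "response")
  cv_pred[held_out] <- ifelse(p >= 0.5, "yes", "no")
}

# Rates pooled over all held-out predictions.
truth <- as.character(dat$RainTomorrow)
cv_rates <- c(misclass = mean(cv_pred != truth),
              fnr      = mean(cv_pred[truth == "yes"] == "no"),
              fpr      = mean(cv_pred[truth == "no"] == "yes"))
cv_rates
```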

KNN classification

Once again using K = 20 neighbors and 10 folds, fit a KNN classification model with stratified k-fold CV using standardized predictors. Set a seed of 41 again.

Report the estimated test misclassification, false negative, and false positive rates under this model. How do the prediction performances of your two models compare based on the estimated test error using stratified 10-fold CV?
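For KNN, the main extra detail is that standardization happens inside the loop, using only the training folds each time. The sketch below shows this on simulated data; the data, object names, and the compact fold construction are assumptions made for illustration.

```r
library(class)  # provides knn()

# Simulated predictors and labels; the values are invented for illustration.
set.seed(41)
n <- 200
x <- data.frame(Humidity3pm = runif(n, 10, 100),
                Pressure3pm = rnorm(n, 1015, 7))
y <- factor(ifelse(x$Humidity3pm + rnorm(n, 0, 10) > 70, "yes", "no"),
            levels = c("no", "yes"))

# Stratified fold ids: sort by class (random within class), deal out in turn.
n_folds <- 10
ord <- order(y, runif(n))
fold_id <- integer(n)
fold_id[ord] <- rep_len(seq_len(n_folds), n)

cv_pred <- character(n)
for (f in seq_len(n_folds)) {
  held_out <- fold_id == f
  # Standardize with statistics from the training folds only.
  mu    <- sapply(x[!held_out, ], mean)
  sigma <- sapply(x[!held_out, ], sd)
  train_z <- scale(x[!held_out, ], center = mu, scale = sigma)
  test_z  <- scale(x[held_out, ],  center = mu, scale = sigma)
  cv_pred[held_out] <- as.character(
    knn(train = train_z, test = test_z, cl = y[!held_out], k = 20))
}

knn_misclass <- mean(cv_pred != as.character(y))
knn_misclass
```

Standardizing the full data set once before the loop would let information from the held-out fold leak into the training step, which is why the scaling is recomputed per fold here.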

Part 3: Comprehension

- I also obtained estimates of the test error rates when performing regular 10-fold CV (i.e. non-stratified). The following shows my results:

|  | Misclass. rate | FNR | FPR |
|---|---|---|---|
| Logistic | 0.1114 | 0.4322 | 0.0313 |
| KNN | 0.1229 | 0.6028 | 0.0106 |

Based on these results, what comparisons (if any) can you make between the performance of the models fit here and the models fit in Part 1 or Part 2? What explains why the results I obtained here are different from the rates you obtained via stratified k-fold CV (apart from the simple fact that we used different fold ids)?

- Compare the magnitudes of your estimated misclassification test error rates when using the validation set approach vs the stratified k-fold CV approach. Does this make sense to you? Why or why not?

- In this problem, do you think a false positive or a false negative is worse? Based on your answer, should you increase or decrease the classification threshold? Why?
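To see mechanically how the threshold trades one error rate against the other, here is a small sketch on made-up probabilities and labels. The helper `rates_at()` and all values are hypothetical.

```r
# Toy predicted probabilities and true labels, invented for illustration.
probs <- c(0.05, 0.20, 0.35, 0.45, 0.55, 0.80)
truth <- c("no", "no", "yes", "no", "yes", "yes")

# Hypothetical helper: FNR and FPR at a given threshold.
rates_at <- function(threshold) {
  pred <- ifelse(probs >= threshold, "yes", "no")
  c(fnr = mean(pred[truth == "yes"] == "no"),
    fpr = mean(pred[truth == "no"] == "yes"))
}

rates_high <- rates_at(0.5)  # stricter: fewer days labelled "yes"
rates_low  <- rates_at(0.3)  # looser: more days labelled "yes"
rbind(threshold_0.5 = rates_high, threshold_0.3 = rates_low)
```

In this toy example, lowering the threshold removes the false negatives at the cost of a false positive; which direction is preferable depends on which error you consider worse.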

Submission

When you’re finished, knit to PDF one last time and upload the PDF to Canvas. Commit and push your code back to GitHub one last time.